Data quality audit highlights: Cambri and our global panel provider partners

Summary

We want to provide our clients with premium-quality consumer insights. By premium quality, we mean that consumer respondents pay attention and provide meaningful open-ended feedback, that they don't speed through the survey, and that no bots are answering.

We carried out a research project (a data quality audit) in cooperation with a global software provider that specialises in measuring survey data quality. We compared the data quality achieved with our proprietary automated data quality solution against the quality achieved with the provider's software. We ran identical product concept tests in identical target groups in the UK market, sourcing more than 2,000 consumer respondents (n=2243) from our two preferred global panel providers, Cint and another global partner.

We are extremely happy to report that our data quality, with our inbuilt data quality solution, is at the same high level as that offered by the global data quality software. In addition, both our panel providers performed well in the evaluation, with Cint performing better than the other global partner.

Our clients want to make data-driven product and brand decisions with confidence. This means they demand high survey data quality from us.

Cambri’s data quality objectives:

- Cambri survey data quality is premium. It equals the quality provided by top class commercially available survey data quality solutions.

- Cambri survey data quality is panel-agnostic. Irrespective of the panel provider (Partner) we work with, our clients get the same high level of data quality and can trust Cambri as an end-to-end consumer insights and concept testing solution.

High survey data quality means:

- No fraud. We get answers from real and unique respondents - no duplicate completes. Similarly, there are no bots answering our surveys, pretending to be human respondents.

- Respondents devote the required attention to Cambri tests and studies. No speeders: respondents do not answer too quickly. No straight-liners: respondents do not tick the same option throughout (e.g. all answers are 1s or all are 2s). Open-ended answers are largely meaningful and do not include gibberish.

- Matching concept test results irrespective of the panel provider. KPI scores such as Purchase Intent are the same among panel providers in the same target group using the same device (e.g. mobile phone).
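
As a rough illustration of the straight-liner criterion above, the following Python sketch flags a respondent who gave the identical answer to every item in a rating grid. The function name and the rule itself are assumptions made for illustration; the audit does not specify how straight-lining is detected in practice.

```python
def is_straight_liner(answers: list[int]) -> bool:
    """Return True when every answer in a multi-item grid is identical.

    Assumption: a single-item grid cannot be straight-lined, so it is never flagged.
    """
    return len(answers) > 1 and len(set(answers)) == 1
```

For example, `is_straight_liner([2, 2, 2, 2])` flags the respondent, while `is_straight_liner([1, 2, 1, 2])` does not.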

Cambri data quality audit: what we did and why

We wanted to understand how Cambri's proprietary data quality solution performs compared to specialised commercial survey data quality solutions, and whether we needed to switch to an external software provider. We decided to compare our proprietary data quality solution against a globally renowned data quality solution, used by global market research agencies and panel providers. At the provider's request, we have anonymised this solution and will refer to it as “External Solution”. In practice, the comparison meant that our technology team plugged the External Solution’s API into our consumer insights and concept testing platform.

Consumer research context and target group

We conducted a product concept test in the instant noodles category. The Noodelist brand offers premium plant-based instant noodles, and its core value proposition is the promise of healthy and nutritious noodles. In the product concept test, we validated consumer aims and pain points, the product value proposition and benefits, as well as packaging shape and design.

We carried out the product concept test in the UK market among 25 to 54-year-old consumers, using age and gender quotas.

Global panel providers and samples in comparison

On an everyday basis, we work with two global panel providers, and used both in our data quality audit. One is Cint and the other prefers to remain anonymous. Therefore, we will refer to the latter as “Global Partner”.

To isolate the effects of the respondent device, we ran specific tests with respondents using only one device type. In total, we conducted product concept tests with four distinct samples in the UK market, each with a sample size of over 500 completes:

- Cint panel respondents using mobile phone (n=584)

- Cint panel respondents using desktop (n=565)

- Global Partner panel respondents using mobile phone (n=569)

- Global Partner panel respondents using desktop (n=525)

After conducting the test with the samples above, we used the External Solution and Cambri data quality solution to evaluate and compare data quality.

Introducing the External Solution and Cambri data quality solution

The External Solution evaluates data quality using various evaluation scores and provides an overall score at the respondent level. The evaluation scores focus on:

- Speeding respondents: respondents who do not devote enough attention and answer too quickly.

- Duplicate respondents: responses that come from the same machine, detected using IP information. This may also indicate that bots are answering the survey.

- Open-ended response quality: whether text answers are meaningful, or whether they are left empty, contain trash or incomprehensible sentences, are exact duplicates, or are copied directly from the internet (all of which indicate bad data quality).

- Other data quality indicators: e.g. whether the time spent on different sections of the survey varies greatly.

The overall score of the External Solution is an aggregate of these scores with different weights and ranges from 0 (worst data quality) to 100 (best data quality).
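
To illustrate what a weighted aggregate on a 0-100 scale looks like, here is a minimal Python sketch. The component names and weights are hypothetical and chosen only for illustration; the External Solution's actual weighting is not disclosed in this audit.

```python
from typing import Mapping

# Hypothetical weights (they sum to 100); NOT the External Solution's real weighting.
ASSUMED_WEIGHTS = {"speeding": 40, "duplicates": 20, "open_ended": 30, "other": 10}


def overall_score(components: Mapping[str, float],
                  weights: Mapping[str, float] = ASSUMED_WEIGHTS) -> float:
    """Weighted average of component scores, each on a 0-100 scale."""
    total_weight = sum(weights.values())
    weighted_sum = sum(weights[name] * components[name] for name in weights)
    return weighted_sum / total_weight
```

Because the result is a weighted average, it stays within 0-100 as long as every component score does.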

The Cambri proprietary automated data quality solution focuses mainly on detecting speeding respondents. Based on the average time taken to answer the survey and a quality threshold, Cambri detects respondents who spent too little time on the survey. In our current system, Cambri removes (i.e. screens out) such respondents from the test results. Additionally, Cambri uses other measures to remove fraudulent respondents.
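
The speeder check described above can be sketched in a few lines of Python. This is only an illustration of the general idea (a cutoff derived from the average completion time); the `threshold_ratio` value of 0.4 and the function name are assumptions, not Cambri's actual threshold or implementation.

```python
from statistics import mean


def flag_speeders(durations_sec: dict[str, float],
                  threshold_ratio: float = 0.4) -> set[str]:
    """Return IDs of respondents who finished faster than a share of the average time.

    threshold_ratio is a hypothetical quality threshold used for illustration only.
    """
    cutoff = threshold_ratio * mean(list(durations_sec.values()))
    return {rid for rid, secs in durations_sec.items() if secs < cutoff}
```

With completion times of 300, 280, 320 and 60 seconds, the average is 240 seconds, the cutoff is 96 seconds, and only the 60-second respondent is flagged.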

Our data quality audit as a step-by-step process

We followed a step-by-step process in our data quality investigation.

Step 1: Exploring overall data quality from different panel providers and devices

We first explored the full dataset (including respondents screened out for quality, from all 4 samples) with the External Solution to evaluate the overall data quality from panel providers. The External Solution parsed the data and evaluated both numeric answers and text responses. For simplicity, we included two open-ended questions in the analysis: the first was placed in the middle of the survey and the second towards the end. We checked the distribution of overall evaluation scores for all responses, as well as the average score for the different samples.

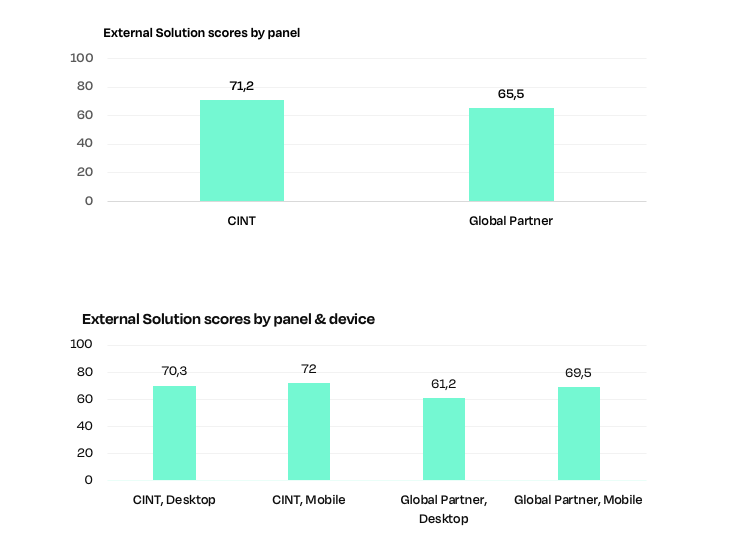

We found that Cint samples have a slightly higher overall data quality score than Global Partner samples, the average score for Cint samples being 71.2 compared to 65.5 for Global Partner. Also, for both panel providers, mobile phone responses (interestingly) have higher data quality than desktop responses.

OVERALL DATA QUALITY SCORES FOR CAMBRI’S GLOBAL PANEL PARTNERS

Full data set, n=2243

Step 2: Screening out bad quality responses using the overall score of the External Solution

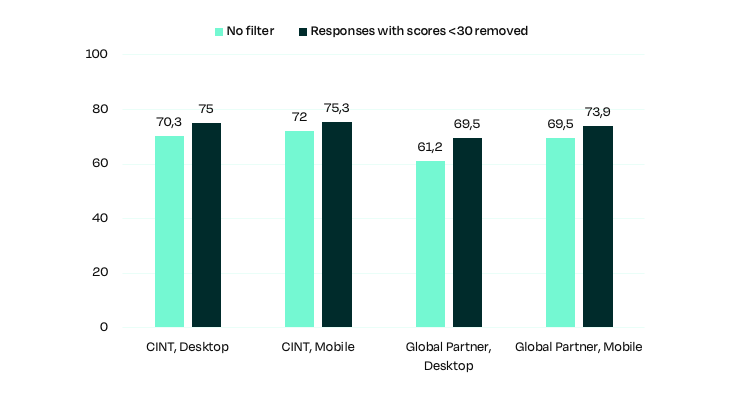

Next, we explored different scenarios where we removed low data quality respondents using the External Solution’s overall quality scores. We found that removing low-scoring responses (e.g. responses with scores below 30) reduces the data quality differences between panel providers and device types. However, speeding respondents remained a challenging issue and were still present in the quality screened-in data.

OVERALL DATA QUALITY SCORE PER EXTERNAL SOLUTION

n= 2243 (light green) 2050 (dark green)

Step 3: Screening out bad quality responses using Cambri data quality solution

We carried out a parallel data quality evaluation with our proprietary data quality solution to get a dataset for comparison. We removed low quality responses using Cambri’s proprietary data quality solution. It screened out 13% of responses from the total dataset.

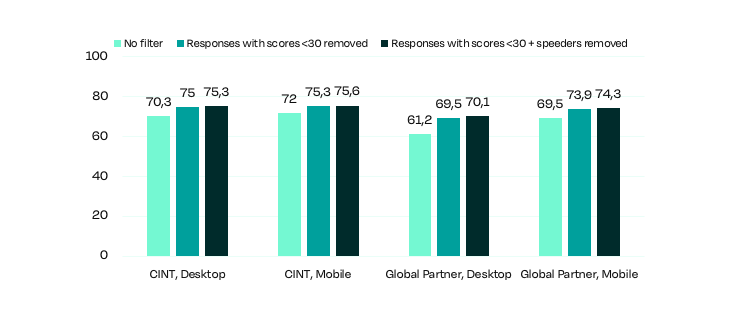

Step 4: Screening out bad quality responses using the overall score of External Solution and their speeder score

Earlier we had learned that removing responses with a low overall score (from the External Solution) does not guarantee that speeders are removed. Therefore, we now included the External Solution’s speeding filter in the process. We learned that removing the responses whose overall quality score was below 30 (on a 0-100 scale) and also removing the speeding responses further improves data quality.

OVERALL DATA QUALITY SCORE PER EXTERNAL SOLUTION

n= 2243 (grey) 2050 (light green) 1952 (dark green)

As a final step, we calibrated the screening out of bad quality responses so that the External Solution removed 13% of bad quality responses from the total dataset. Thereby, we got comparable datasets: 1) Quality checked dataset by the External Solution (n=1952), and 2) Quality checked dataset by Cambri internal solution (n=1945), both screening out 13% of responses from the total dataset. We were now able to compare the outcome of Cambri proprietary data quality against external quality software.
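
The screening rule and the resulting removal shares can be checked with a short Python sketch. The `Response` record and the function names are hypothetical and serve only to illustrate the filter (overall score below 30, or flagged as a speeder); the sample sizes are the ones reported above.

```python
from dataclasses import dataclass


@dataclass
class Response:
    respondent_id: str
    overall_score: float  # 0 (worst) to 100 (best)
    is_speeder: bool


def screen(responses: list[Response], min_score: float = 30.0) -> list[Response]:
    """Keep responses scoring at least min_score that are not flagged as speeders."""
    return [r for r in responses if r.overall_score >= min_score and not r.is_speeder]


def removed_share(total: int, kept: int) -> float:
    """Share of the full dataset that was screened out."""
    return (total - kept) / total


# Reported sizes: full dataset 2243, External Solution 1952, Cambri solution 1945.
external_removed = removed_share(2243, 1952)
cambri_removed = removed_share(2243, 1945)
```

Both shares round to 13%, which is what makes the two quality-checked datasets comparable in size.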

Step 5: Comparing data quality provided by the External Solution and Cambri Internal Solution

We applied the External Solution’s filter as described above and evaluated the data quality of the two datasets, 1) the dataset quality checked by the External Solution and 2) the dataset quality checked by the Cambri internal solution, on the following dimensions:

- KPI scores overall and by panel and device type

- Open-ended answers quality

- Number of detected duplicate respondents in the data

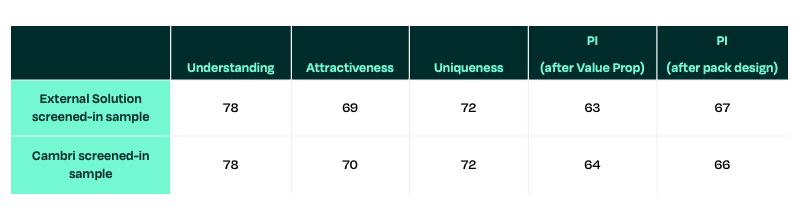

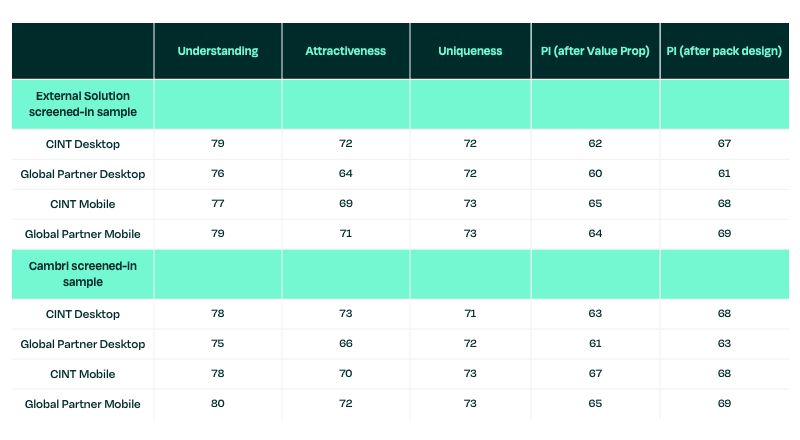

5.1. KPI scores comparison

We compared 5 different product concept test KPI scores: (a) Understanding, (b) Attractiveness, (c) Uniqueness, (d) Purchase Intent (on seeing the value proposition), and (e) Purchase Intent (on seeing the pack design).

For each KPI, respondents scored on a scale of 1-5 (1 = worst, 5 = best) and reporting was done as a top-2 box score, i.e., the reported KPI is the % of respondents who gave a score of 4 or 5. The results show that for both datasets (obtained after removing bad quality data), the KPI scores show little variation and can be considered similar to each other.
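
The reported KPI, the share of respondents scoring 4 or 5 on the 1-5 scale, can be computed as in the following minimal Python sketch. The function name is our own choice for illustration and the ratings in the example are made up.

```python
def top2_box(ratings: list[int]) -> float:
    """Percentage of ratings that are 4 or 5 on a 1-5 scale."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    return 100.0 * sum(1 for r in ratings if r >= 4) / len(ratings)


# Made-up example: 4 of 8 respondents gave a 4 or 5.
example_kpi = top2_box([5, 4, 3, 2, 1, 4, 5, 3])
```

Computing the same KPI on both quality-checked datasets, and per panel and device type, gives the figures that are compared in this section.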

In addition, we compared the KPI scores per panel provider and device type and found that, when comparing the same panel and device type KPI, there were no substantial differences in the KPI scores between the two samples.

We can conclude that the External Solution filtering and Cambri filtering perform equally well and give similar KPI scores. This is great news for Cambri and our clients as we want to ensure our clients get high data quality, irrespective of the panel provider.

5.2. Open-ended data quality comparison

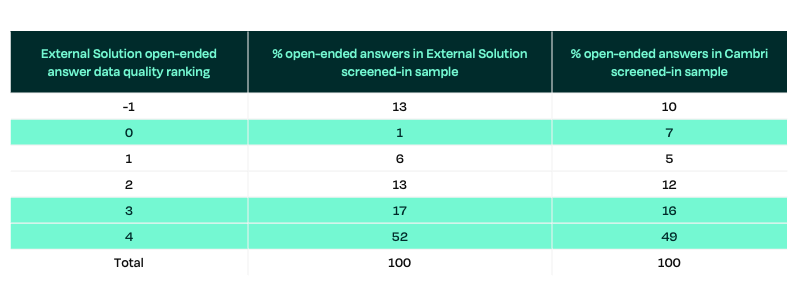

The External Solution ranks the data quality of open-ended responses on a scale of -1 to 4, where -1 = empty answers and 0-4 represent data quality, with 3 and 4 as high data quality answers.

Using the External Solution's open-ended answer ranking, we compared the two datasets and found, again, that there were no substantial differences in data quality. The % of answers with a ranking of 3 or 4 (i.e., high quality answers) was similar in both datasets (65-69%).

However, the External Solution filtering was more efficient at removing nearly all of the lowest quality answers (rank = 0). We therefore continued our analysis to understand what kind of open-ended answers are classed as rank 0, and whether there is a clear difference in data quality between the External Solution dataset and the Cambri solution dataset.

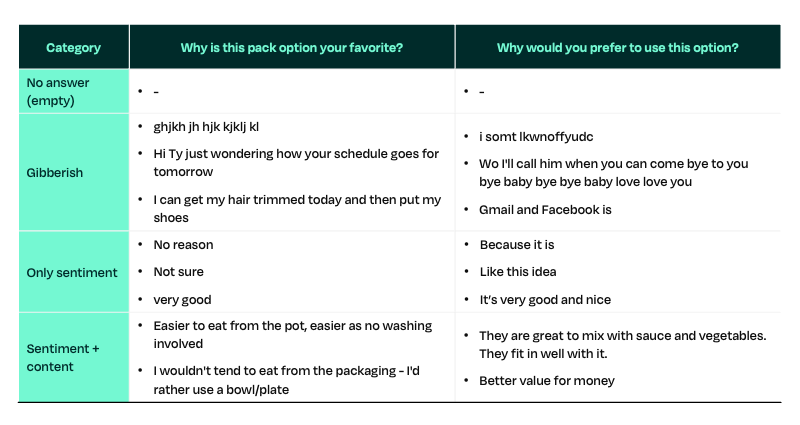

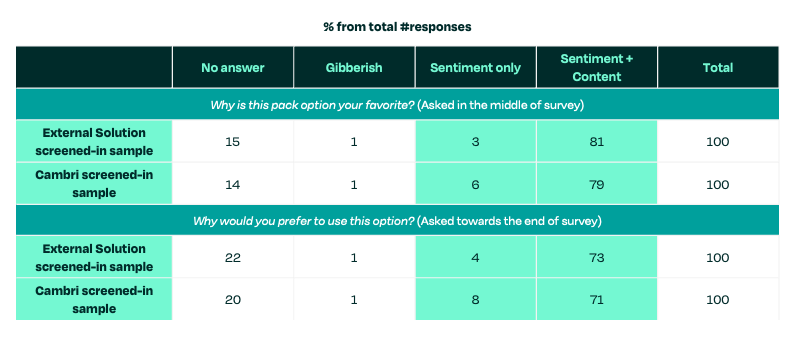

Now, one of Cambri’s experienced researchers manually analysed four categories one-by-one: (a) empty answers, (b) gibberish answers, (c) answers with only sentiment, (d) answers with sentiment and content.

As the table below shows, we found no substantial differences in data quality between the External Solution dataset and Cambri proprietary solution dataset. Both samples had very similar profiles of good quality answers, meaning answers with only sentiment or answers including both sentiment and meaningful content.

From open-ended answer quality comparison, we can conclude that both the External Solution and Cambri internal solution perform equally well and give similar quality open-ended answers.

5.3. Number of detected duplicate respondents

The final parameter we checked was the number of duplicate respondents (i.e., responses coming from the same machine, which may also indicate bots). For this analysis, the External Solution provided insights based on IP detection technology.

Duplicate detection showed that:

- Per individual panel provider, there were 0% (0/1149) duplicates for Cint and 0.09% (1/1094) duplicates for Global Partner – almost no duplicate respondents.

- When the data was combined, approximately 3% of respondents were duplicates, meaning they were present in both Cint and Global Partner samples.

- Regarding device types, desktop respondents had a slightly higher duplicate rate of 5%.

This is good news for us and our clients as it shows that both our global panel providers, Cint and Global Partner, keep duplicates within their own samples to virtually zero through data pre-cleaning measures.
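
The difference between the per-panel and combined figures can be illustrated with a small Python sketch: each panel can be free of duplicates on its own while the same respondent still appears in both. The fingerprint strings are made up, and the functions are a simplified stand-in; the External Solution's actual IP-based detection is not described in detail here.

```python
from collections import Counter


def duplicate_rate(fingerprints: list[str]) -> float:
    """Share of responses that repeat a fingerprint already present in the list."""
    counts = Counter(fingerprints)
    extra = sum(c - 1 for c in counts.values())
    return extra / len(fingerprints)


def cross_panel_overlap(panel_a: list[str], panel_b: list[str]) -> set[str]:
    """Fingerprints that appear in both panels."""
    return set(panel_a) & set(panel_b)


# Made-up fingerprints: no duplicates inside either panel, one shared across panels.
panel_one = ["fp-1", "fp-2", "fp-3"]
panel_two = ["fp-3", "fp-4", "fp-5"]
```

Here each panel has a duplicate rate of zero, but the combined list contains one repeated fingerprint, which is the kind of cross-panel duplicate the combined figure captures.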

Our conclusions

We started our investigation with the overall objective of understanding and benchmarking the data quality provided by Cambri's proprietary automated data quality solution, and of taking improvement measures if needed. Our investigation gave us versatile and reliable insights into the efficacy of Cambri’s own data quality solution in comparison to the widely used External Solution.

Based on our investigation, we are confident that we provide high quality survey data to our clients by using our proprietary data quality solution:

- Cambri proprietary data quality solution provides the same quality level as the External Solution.

- Cambri data quality solution is efficient at minimising data quality differences among panel providers.

- When Cambri sources respondents from one panel only for a client project, the Cambri data quality solution adequately provides a high-quality dataset.

Next steps

We want to further develop our data quality measures. We have agreed on the following next steps internally:

- When survey data is sourced from more than one panel provider for the same test, we will ensure that no duplicates are allowed.

- We will use our proprietary Cambri Natural Language Processing solution to track bad quality text answers.

Authors:

Dr Heli Holttinen, Founder & CEO

Dr Apramey Dube, Senior Research Manager

Dr Timo Erkkilä, Cambri AI and Technology Advisor

Roman Nikoleav, Head of Technology

Jani Ollikainen, Senior Software Engineer